Measuring the Performance of Asynchronous Controllers

Recently I’ve been working with ASP.NET MVC 2.0’s new asynchronous controllers. The idea with these is that you can split the request handling pipeline into two phases:

- First, your controller begins one or more external I/O calls (e.g., SQL database calls or web service calls). Without waiting for them to complete, it releases the thread back into the ASP.NET worker thread pool so that it can deal with other requests.

- Later, when all of the external I/O calls have completed, the underlying ASP.NET platform grabs another free worker thread from the pool, reattaches it to your original HTTP context, and lets it complete handling the original request.

This is intended to boost your server’s capacity. Because you don’t have so many worker threads blocked while waiting for I/O, you can handle many more concurrent requests. A while back I blogged about a way of doing this with an early preview release of ASP.NET MVC 1.0, but now in ASP.NET MVC 2.0 it’s a natively supported feature.

In this blog post I’m not going to explain how to create or work with asynchronous controllers. That’s already described elsewhere on the web, you can see the process in action in TekPub’s “Controllers: Part 2” episode, and you’ll find extremely detailed coverage and tutorials in my forthcoming ASP.NET MVC 2.0 book when it’s published. Instead, I’m going to describe one possible way to measure the performance effects of using them, and explain how I learned that under many default circumstances, you won’t get any benefit from using them unless you make some crucial configuration changes.

Measuring Response Times Under Heavy Traffic

To understand how asynchronous controllers respond to differing levels of traffic, and how this compares to a straightforward synchronous controller, I put together a little ASP.NET MVC 2.0 web application with two controllers. To simulate a long-running external I/O process, they both perform a SQL query that takes 2 seconds to complete (using the SQL command WAITFOR DELAY '00:00:02') and then they return the same fixed text to the browser. One of them does it synchronously; the other asynchronously.

Next, I put together a simple C# console application that simulates heavy traffic hitting a given URL. It simply requests the same URL over and over, calculating the rolling average of the last few response times. First it does so on just one thread, but then gradually increases the number of concurrent threads to 150 over a 30-minute period. If you want to try running this tool against your own site, you can download the C# source code.

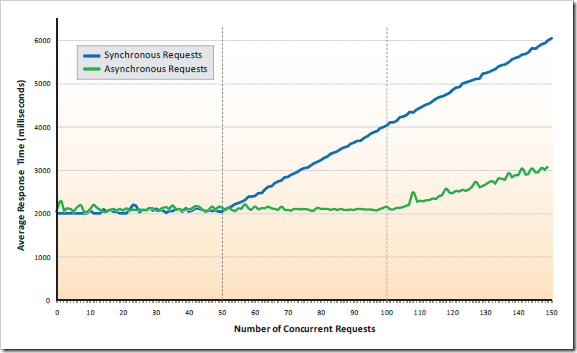

The results illustrate a number of points about how asynchronous requests perform. Check out this graph of average response times versus number of concurrent requests (lower response times are better):

To understand this, first I need to tell you that I had set my ASP.NET MVC application’s worker thread pool to an artificially low maximum limit of 50 worker threads. My server actually has a default max threadpool size of 200 – a more sensible limit – but the results are made clearer if I reduce it.

As you can see, the synchronous and asynchronous requests performed exactly the same as long as there were enough worker threads to go around. And why shouldn’t they?

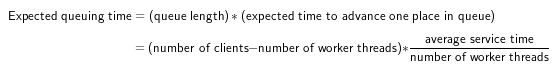

But once the threadpool was exhausted (more than 50 concurrent clients), the synchronous requests had to form a queue to be serviced. Basic queuing theory tells us that the average time spent waiting in a queue is given by the formula:

… and this is exactly what we see in the graph. The queuing time grows linearly with the length of the queue. (Apologies for my indulgence in using a formula – sometimes I just can’t suppress my inner mathematician. I’ll get therapy if it becomes a problem.)
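One back-of-the-envelope way to see where that straight line comes from is sketched below. It is an illustrative model, not necessarily the exact formula referred to above, and the symbols are assumptions introduced here: N is the number of concurrent clients (each firing its next request as soon as the previous one returns), T is the number of worker threads, S is the fixed time each request holds a thread, R is the average response time, and W is the time spent waiting in the queue.

```latex
\begin{align}
X_{\max} &= \frac{T}{S} && \text{(throughput cap once all $T$ threads are busy)} \\
R &= \frac{N}{X_{\max}} = \frac{N\,S}{T} && \text{(Little's law, for } N > T\text{)} \\
W &= R - S = S \cdot \frac{N - T}{T} && \text{(time spent waiting in the queue)}
\end{align}
```

With S = 2 seconds and T = 50, this model predicts an average response time of about 6 seconds at N = 150 clients (4 seconds of it spent queuing), and just the plain 2 seconds for anything up to 50 clients. The wait W grows linearly with N − T, which is the number of requests left without a thread.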

The asynchronous requests didn’t need to start queuing so soon, though. They don’t need to block a worker thread while waiting, so the threadpool limit wasn’t an issue. So why did they start queuing when there were more than 100 clients? It’s because the ADO.NET connection pool is limited to 100 concurrent connections by default.

In summary, what can we learn from all this?

- Asynchronous requests are useful, but *only* if you need to handle more concurrent requests than you have worker threads. Otherwise, synchronous requests are better just because they let you write simpler code.

(There is an exception: ASP.NET dynamically adjusts the worker thread pool between its minimum and maximum size limits, and if you have a sudden spike in traffic, it can take several minutes for it to notice and create new worker threads. During that time your app may have only a handful of worker threads, and many requests may time out. The reason I gradually adjusted the traffic level over a 30-minute period was to give ASP.NET enough time to adapt. Asynchronous requests are better than synchronous requests at handling sudden traffic spikes, though such spikes don’t happen often in reality.)

- Even if you use asynchronous requests, your capacity is limited by the capacity of any external services you rely upon. Obvious, really, but until you measure it you might not realise how those external services are configured.

- It’s not shown on the graph, but if you have a queue of requests going into ASP.NET, then the queue delay affects *all* requests – not just the ones involving expensive I/O. This means the entire site feels slow to all users. Under the right circumstances, asynchronous requests can avoid this site-wide slowdown by not forcing the other requests to queue so much.

Gotchas with Configuring for Asynchronous Controllers

The main surprise I encountered while trying to use asynchronous controllers was that, at first, I couldn’t observe any benefit at all. In fact, it took me almost a whole day of experimentation before I discovered all the things that were preventing them from making a difference. If I hadn’t been taking measurements, I’d never have known that my asynchronous controller was entirely pointless under default configurations. For me it’s a reminder that understanding the theory isn’t enough; you have to be able to measure it.

Here are some things you might not have realised:

- Don’t even bother trying to load test your asynchronous controllers using IIS on Windows XP, Vista, or 7. Under these operating systems, IIS won’t handle more than 10 concurrent requests anyway, so you certainly won’t observe any benefits.

- **If your application runs under .NET 3.5, you will almost certainly need to change its MaxConcurrentRequestsPerCPU setting.** By default, you’re limited to 12 concurrent requests per CPU – and this includes asynchronous as well as synchronous ones. Under this default setting, there’s no way you can get anywhere near the default worker threadpool limit of 100 per CPU, so you might as well handle everything synchronously. For me this is the biggest surprise; it just seems like a chronic mistake. I’d be interested to know if anyone can explain this. Fortunately, if your app runs under .NET 4, then the MaxConcurrentRequestsPerCPU value is 5000 by default.

- If your external I/O operations are HTTP requests (e.g., to a web service), then you may need to **turn off autoConfig and alter your maxconnection setting (either in web.config or machine.config).** By default, autoConfig allows for 12 outbound TCP connections per CPU to any given address. That limit might be a little low.

- If your external I/O operations are SQL queries, then you may need to **change the max ADO.NET connection pool size.** By default, the limit is 100 in total, whereas the default ASP.NET worker thread pool limit is 100 per CPU, so you’re likely to hit the connection pool size limit first (unless, I guess, you have less than one CPU…).
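For reference, here is roughly where each of those settings lives. Treat these as sketches under stated assumptions rather than recommended values, and not as the exact configuration used in the test above: the numbers, the connection string name, and the server and database names are placeholders made up for illustration, so check everything against the documentation for your own .NET and IIS versions.

As far as I know, on .NET 3.5 SP1 with IIS 7 in integrated mode, the concurrent request limit is set in aspnet.config (in the framework directory), not in web.config:

```xml
<!-- aspnet.config (.NET 3.5 SP1, IIS 7 integrated mode); values are illustrative -->
<configuration>
  <system.web>
    <!-- Raise the 12-per-CPU default; 5000 mirrors the .NET 4 default -->
    <applicationPool maxConcurrentRequestsPerCPU="5000"
                     maxConcurrentThreadsPerCPU="0"
                     requestQueueLimit="5000" />
  </system.web>
</configuration>
```

Turning off autoConfig is done on the processModel element, which to my knowledge is only honoured at machine level:

```xml
<!-- machine.config: processModel is a machine-level section -->
<configuration>
  <system.web>
    <!-- With autoConfig off, other auto-tuned values (such as maxWorkerThreads)
         fall back to their own defaults, so you may need to set them explicitly -->
    <processModel autoConfig="false" />
  </system.web>
</configuration>
```

The outbound HTTP connection limit and the ADO.NET pool size can then go in the application's own web.config:

```xml
<!-- web.config: "ExampleDb", "myServer" and "myDatabase" are hypothetical names -->
<configuration>
  <connectionStrings>
    <!-- Max Pool Size raises the default pool limit of 100;
         Asynchronous Processing enables the Begin/End SqlCommand methods -->
    <add name="ExampleDb"
         connectionString="Data Source=myServer;Initial Catalog=myDatabase;Integrated Security=True;Asynchronous Processing=True;Max Pool Size=200"
         providerName="System.Data.SqlClient" />
  </connectionStrings>
  <system.net>
    <connectionManagement>
      <!-- Allow up to 100 concurrent outbound connections to any one address -->
      <add address="*" maxconnection="100" />
    </connectionManagement>
  </system.net>
</configuration>
```

Whatever values you choose, rerun the load test afterwards: raising one limit usually just exposes the next one along.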

If you don’t have high capacity requirements – e.g., if you’re building a small intranet application – then you can probably forget all about this blog post and asynchronous controllers in general. Most ASP.NET developers have never heard of asynchronous requests and they get along just fine.

But if you are trying to boost your server’s capacity and think asynchronous controllers are the answer, please be sure to run a practical test and make sure you can observe that your configuration meets your needs.